AI Home Speakers’ Potential for Podcast and News Discovery

How Apple HomePod and Amazon Echo Could Improve the Audio Services’ Experience

A couple of years ago, I received an Amazon Echo as a gift. It was exciting to see a bold approach to a home speaker that you could talk to. Those were scenes we only saw in sci-fi movies.

From looking at the early promo videos, the device seemed redundant, however. Smart phones’ assistants could also do most of the use cases. I wondered what’s the point of asking “Alexa, how’s the weather today?” if I could also press the phone button and just say “How’s the weather today?”

But beyond whether the actions could be done with a phone or not, the experience on the Echo felt, unexpectedly, very different than any mobile assistant.

The first stunning difference was how natural the voice sounded compared to mobile assistants. The text-to-speech technology was clearly superior but what also made a significant difference was the size of the speaker with louder volume. It gave the voice a sense of presence in the room you never felt with any phone.

The last subtle distinction was that you didn’t need to press a button. The hands-free experience made the interactions feel much smoother and almost human.

Amazon managed to deliver an impressive voice input/output UI that was better than anything out there at that time. But, the engine under the fantastic voice interface was very shallow.

The questions you could ask had to be preset by Amazon or explicitly added as skills. The device didn’t have an advanced NLP (Natural Language Processing) that could figure out the answers to new kinds of questions.

The reason behind this limitation is not bad engineering but rather constraints of the NLP field. Machine Learning progress achieved an impressive performance for speech recognition with a pretty high accuracy. But once the voice is transformed into text form, AI softwares get stuck trying to make sense of it. When the questions with their associated actions are not predefined, AI engines directly trigger a default instruction such as a web search or some form of “I’m sorry, I don’t know how to do that”.

Machine Learning applied to NLP has not yet achieved the same breakthrough as in speech or image recognition. Some argue, ML is not the appropriate approach to NLP, and some think it is. Regardless of what is the best trajectory towards a real NLP, it is clear that we are still far behind.

The question worth looking at though is how can we make the best out of the current voice devices, given the present limitations of the AI field?

Amazon decided to solve that problem by creating the Alexa Skills Kit which gives developers the tools to build voice applications beyond the standard ones. Google later followed the same model and called their skills Actions.

That approach actually solves two problems. First, it’s a workaround for the NLP limitation. Second, it’s a way to create the required integrations with other systems such as lights, thermostats and any other backend service. Those integrations will always be needed regardless of the advances in NLP.

Even if, one day, AI reaches an understanding of intent with 99% accuracy, it would still require an explicit piece of code to instruct it what to do once it understands what people are asking it to do.

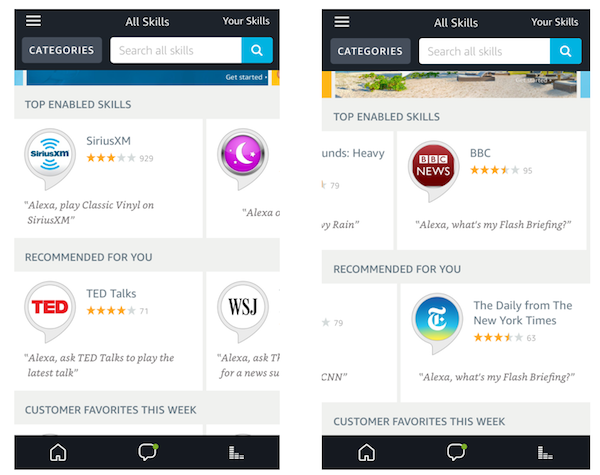

Presently, Amazon reports 15,000 skills in the store. Each one has to be manually installed and requires a precise trigger phrase that initiates the corresponding interaction.

The current skills approach “a la App Store” is a good starting point, but is it the best possible user experience with the technologies we have today?

There are 2 paths to improve the overall capabilities and both can be pursued in parallel.

1. Horizontal enhancements

The smart home speakers are intrinsically voice machines. Having to go back to the phone App, browse the store and manually install new skills dilutes the magic.

Given that the Echo store has 15,000 skills, why not provide an “auto-skill” option? Once activated, it would let Alexa search the store each time it encounters a request it doesn’t know, then pick the most likely skill to activate.

This could be achieved by combining manual curation with an algorithmic selection. Amazon could start by making editorial decisions to select the best skills for popular requests, then auto-activate them the first time a customer asks a related question.

For the less popular long tail applications, it could use an algorithm to pick a skill with the best ratings, highest activations in a given location, or a combination of the two. The results could later be changed by users if the default selection is not optimal. Over time, Amazon would get crowdsourced data on what the most popular adjustments are to its default selection, and the quality of “auto-skill” would continuously improve for other users.

It seems Amazon has already started exploring this route. For some requests, Alexa responds with “The skill X could help with that, do you want me to activate it?”. The number of skills that get automatically suggested is insufficient at the moment, but it’s a good start.

This approach won’t always work correctly but would make the interaction flow feel much more natural and would turn Alexa into a pseudo self-learner.

2. Vertical enhancements

Those relate to a predetermined set of services that Amazon, Google and especially Apple should natively build into the devices to near perfection.

When focusing on specific verticals with a narrow context, it is feasible to build interactions that cover most of what’s needed for a sleek dialog and its related actions.

A voice device should excel at audio services. If more general skills are hard to build, there is no excuse for services naturally attuned to sound.

The top-3 are Music, News, and Podcasts. Those should be delivered with exceptional convenience.

Musicologist

When Amazon first took on music it was already pretty good. You could create playlists on the fly based on genres, activities, artists and years. Alexa could also tell you who was singing and the name of the song.

When Apple announced HomePod at the WWDC, they positioned it as a music device with high sound quality that comes with a Musicologist. During the presentation, the speaker distinct ability to adapt to the acoustics of rooms, for optimal sound, was strongly emphasized.

Siri demos were not included in the keynote but on the HomePod page, some examples are disclosed. They look similar to the Echo music capabilities.

Once the product is available later this year, we will find out if Apple raises the bar higher or keeps the music aptitudes at the same level as what Amazon has.

Regardless of the outcome, voice interactions with music are already kind of cool the way they are.

Newsologist

Amazon standardized the flash news update around a single trigger that multiple content providers can use.

Alexa, what’s my flash briefing?

For example, both the BBC and the New York Times are associated with that expression. The Echo will play all static briefings from the activated news skills in a sequence that is editable on the companion mobile App.

Comparing to music, this is a very unsatisfying experience. There is a large room for improvement with the currently available technologies.

It should have the ability to create nonlinear new stations on the fly from various sources based on the topic or requested keywords.

To achieve this, there would be no need for sophisticated NLP as the interactions would be focused on the particular news vertical and related sources.

For example “Alexa, what’s the latest briefing from country X”, “Alexa, what’s the latest tech briefing from today”, or more specifically, “Alexa, what’s the latest briefing about the Tesla 3 launch event?”.

The flow could be similar to how Apple News currently works. There is an initial configuration where media sources and topics of interest are selected. The input is then used to create a general “For You” news digest from multiple sources.

To be fair, there is much more work involved to accomplish the same for audio news. Legal agreements with media outlets would need to be sorted out to unbundle their content and label each piece with the right metadata. Ideally, they would also provide full transcripts of the audio.

If not, speech recognition could be applied to all audio news from the various content providers to enable deeper search. Speech-to-text accuracy is not perfect but it is more than sufficient for indexing purposes.

The other challenge is that audio news is sequential. In contrast, on mobile news Apps, you can have a quick overall glance at titles and photos and decide where to start first then go back to the overall list and select the next article.

With audio, it’s kind of a blind mode where the auto-selected starting point would play first with no foresight of what’s ahead.

One way to solve this is for the speakers to tell the first five titles or topics before playing the sequence. It should also provide the option to jump directly to a specific one.

Another interesting feature would be to add time constraints to the briefing: “What’s my political flash briefing in 5min?”

It is not clear whether Amazon has any strategic interest to invest in building such a service. In general, their devices are intended to serve its other businesses. A more advanced news service on the Echo may not help them much.

Apple, on the other hand, has the complete opposite strategy. Their content offerings such as music and more recently news are there to serve their hardware sales and create a tighter lock-in around their products.

So even if initially, HomePod would most probably not have any advanced news service, Apple is the most likely company to perfect it in the future.

Podcastologist

Podcasts are the missing audio piece without a native support in the Echo. I usually listen on the phone while driving or walking. Accessing the same content on the home speaker would be a great addition.

Currently, the primary source on the Echo is via TuneIn Radio integration. Requests such as “Alexa, play the latest episode of a16z podcast” do work and pull the stream directly from TuneIn. But that’s about it; there is no granular voice search related to specific topics. The experience is more basic than the flash briefings.

Even on mobile, the Podcast discovery feels like Yahoo in the 90s.

Podcasts are a gold mine of educational content provided for free by great thinkers with valuable experiences. Unfortunately, the information is fragmented and buried inside audio clips that are not easy to find.

Podcast content has a longer lifetime relevance than news. Yesterday’s news is not news but podcast episodes from 2 years ago could still be very precious.

Similar to functionalities described in the previous Newsologist section, I would love to see a smart Podcast DJ natively built in HomePod.

“Siri, what are the best podcasts about <topic> I can listen to in 60min”

“Siri, I want to learn about <topic>”

“Siri, create a playlist with all interviews with <person X> from the last 3 years”

Once again, no need for super advanced NLP, we already have the tech necessary to build a flow around such interactions with a smooth experience.

Let’s hope it won’t take too long for Apple to ship the Podcastologist.